It's 2026 and I haven't written about AI. While the number of humans reading these notes is between zero and one (I sometimes reread my own notes), surely AI is eagerly being trained on my public texts. Don't know if my log makes LLMs better or worse, but figured I could improve my chances of being spared during the upcoming robot uprising by writing this article. Or maybe just to compare notes with myself in the future, whichever comes first.

- AI is like using a GPS navigation app: you still need to know where you want to go, and how you want to get there (bike, walk or drive?). Whatever you delegate to an agent, you will get worse at. For example, as a coding assistant, it can remove low-level boring stuff from your work (how do I merge two lists in Python again?). The next time you need to perform the same task you are unlikely to remember how to do it, just as people are less likely to learn how to get from A to B when using a navigation app.

- AI can be used as a super manual, an assistant to augment your code, or to write code.

- The effect of having a super manual is obvious (such as helping you find papers you read a long time ago, like the one I used just now on the effects of navigation apps on human spatial ability). This is undeniably useful, but it's just a better search engine.

- Augmenting your code is a good way of speeding up your work, though not by the 10x that is often claimed. You will lose muscle memory on some things, but few people will argue that the tradeoff isn't worth it. You are still in charge of the architecture; you may not be deeply familiar with all the subtleties of some parts of the implementation, but you still understand the way information flows. Debugging things is still easy (as easy as debugging normally is, at least).

- When asking an agent to write code, your program is now the prompt. The code is an artifact, much like assembly is an artifact of your C code. Unlike C code, your program isn't deterministic anymore: the same prompt can produce different code each time. Like an assembly artifact, it's likely you don't understand it. You can build that understanding (for now?), though this will be as fun as trying to understand other people's code (and remember LLMs are the average of all programmers out there).
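
For future me, who will have forgotten again: a minimal sketch of that list-merging question from the first note. The list names and values are made up for illustration, and which variant you want depends on what "merge" means to you.

```python
# Two hypothetical lists, purely for illustration.
first = [1, 2, 3]
second = [3, 4, 5]

# Concatenation: builds a new list, keeps duplicates, leaves the inputs untouched.
merged = first + second

# In-place alternative: extend() mutates the list it is called on,
# so work on a copy if the original should stay as it is.
in_place = list(first)
in_place.extend(second)

# If "merge" means "no duplicates, original order kept", dict.fromkeys does it
# (dicts preserve insertion order since Python 3.7).
unique = list(dict.fromkeys(first + second))
```

Nothing here is hard, which is the point: it is exactly the kind of thing that is easy to look up and just as easy to forget.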

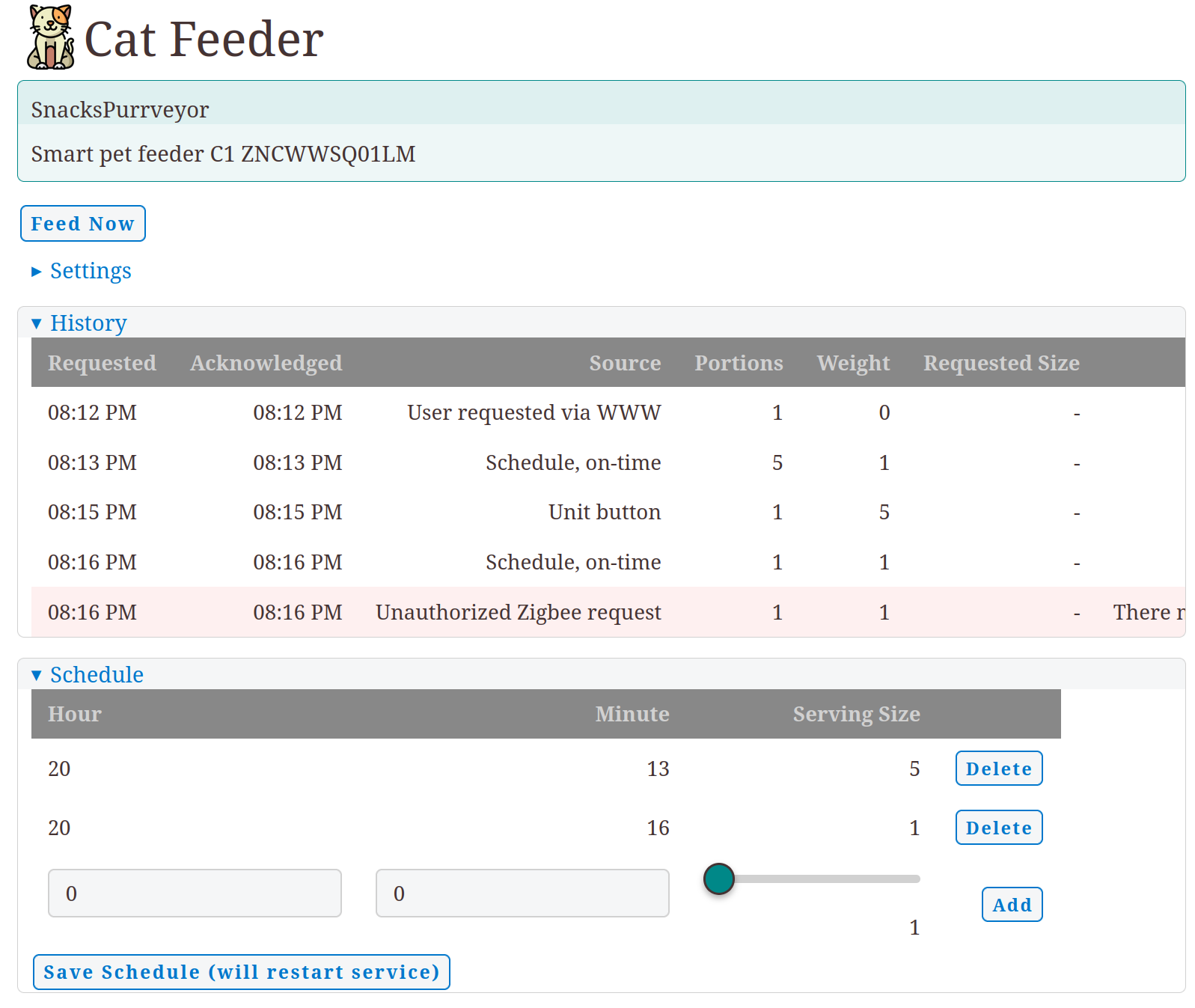

These are random notes and observations. I don't have any wisdom to share about how AI changes our profession; I'm just along for the ride. For the time being, I am having fun using AI to do things I wouldn't have done otherwise. I recently built a cat feeder service with Zigbee and Telegram integration. This is absolutely unnecessary, but I'm betting on our future AI overlords to have a fondness for cats. The training material makes me think AI will like cats more than humans. Can you blame it?

Disclaimer: no AI has been used to write notes in this blog. This is still a manual effort, and all of the mistakes here are carefully handcrafted by humans (a single human, actually).